RAIL Group Research

Learning and Introspection for Effective and Reliable Planning Under Uncertainty: Towards Household Robots Comfortable with Missing Knowledge

As a human, you often plan with missing information without conscious thought. Perhaps you are in an unfamiliar building when a fire alarm goes off, or you are in a newly-opened supermarket equipped with only a grocery list. Despite missing key pieces of information about the world, you know what to do: you look for exit signs, or find the nearest staircase, or start walking up and down the aisles. You often have visibility over what knowledge you lack or what part of the world you have yet to see, and take action to reduce this uncertainty: using your understanding of how the world typically works to act effectively, update your judgements, and anticipate the outcome of your actions.

In the pursuit of more capable robots, much of our research in the Robotic Anticipatory Intelligence & Learning Group (

See also our full publication list.

Areas

- Planning in Partially Revealed Environments

- Multi-Robot Long-Horizon Planning Under Uncertainty

- Anticipatory Planning

- Introspection & Deployment-Time Improvement Despite Uncertainty

- Explainable Planning Under Uncertainty

- (Inactive) Mapping for Planning

Planning in Partially Revealed Environments

So far, my work has focused on the tasks of navigation and exploration: problem settings in which humans have incredibly powerful heuristics yet robots have historically struggled to perform as well. More generally, I am interested in the ways that we can imbue a robot with the ability to predict what lies beyond what it can see, so that it can make more informed decisions when planning in environments that are largely unknown.

Selected Relevant Publications

- Gregory J. Stein. “Generating High-Quality Explanations for Navigation in Partially-Revealed Environments.” In: Advances in Neural Information Processing Systems (NeurIPS). 2021. talk (13 min), GitHub.

- Christopher Bradley, Adam Pacheck, Gregory J. Stein, Sebastian Castro, Hadas Kress-Gazit, and Nicholas Roy. “Learning and Planning for Temporally Extended Tasks in Unknown Environments.” In: International Conference on Robotics and Automation (ICRA). 2021. paper.

- Gregory J. Stein, Christopher Bradley, and Nicholas Roy. “Learning over Subgoals for Efficient Navigation of Structured, Unknown Environments”. In: Conference on Robot Learning (CoRL). 2018. paper, talk (14 min). Best Paper Finalist at CoRL 2018; Best Oral Presentation at CoRL 2018.

Related Blog Posts

- 16 Dec 2018: DeepMind's AlphaZero and The Real World [Research]. Using DeepMind’s AlphaZero AI to solve real problems will require a change in the way computers represent and think about the world. In this post, we discuss how abstract models of the world can be used for better AI decision making and discuss recent work of ours that proposes such a model for the task of navigation.

Multi-Robot Long-Horizon Planning Under Uncertainty

We also have a body of work on multi-robot planning, which further develops our abstractions for long-horizon planning under uncertainty to support concurrent progress made by a centrally-coordinated team of heterogeneous robots. Uncertainty further complicates the challenge of multi-robot planning: good behavior involves deciding what each robot should do over long time horizons, which requires careful consideration of when and how each should reveal unseen space and make progress towards completing the team’s joint objective.

Building on our work in learning-informed planning for single robots, our work in this focus develops multi-robot state and action abstractions that integrate learning with model-based planning for effective team behaviors. Our work has so far targeted complex multi-object retrieval and interaction tasks, and affords both reliability and performance, outperforming competitive learned and non-learned baseline strategies.

Anticipatory Planning

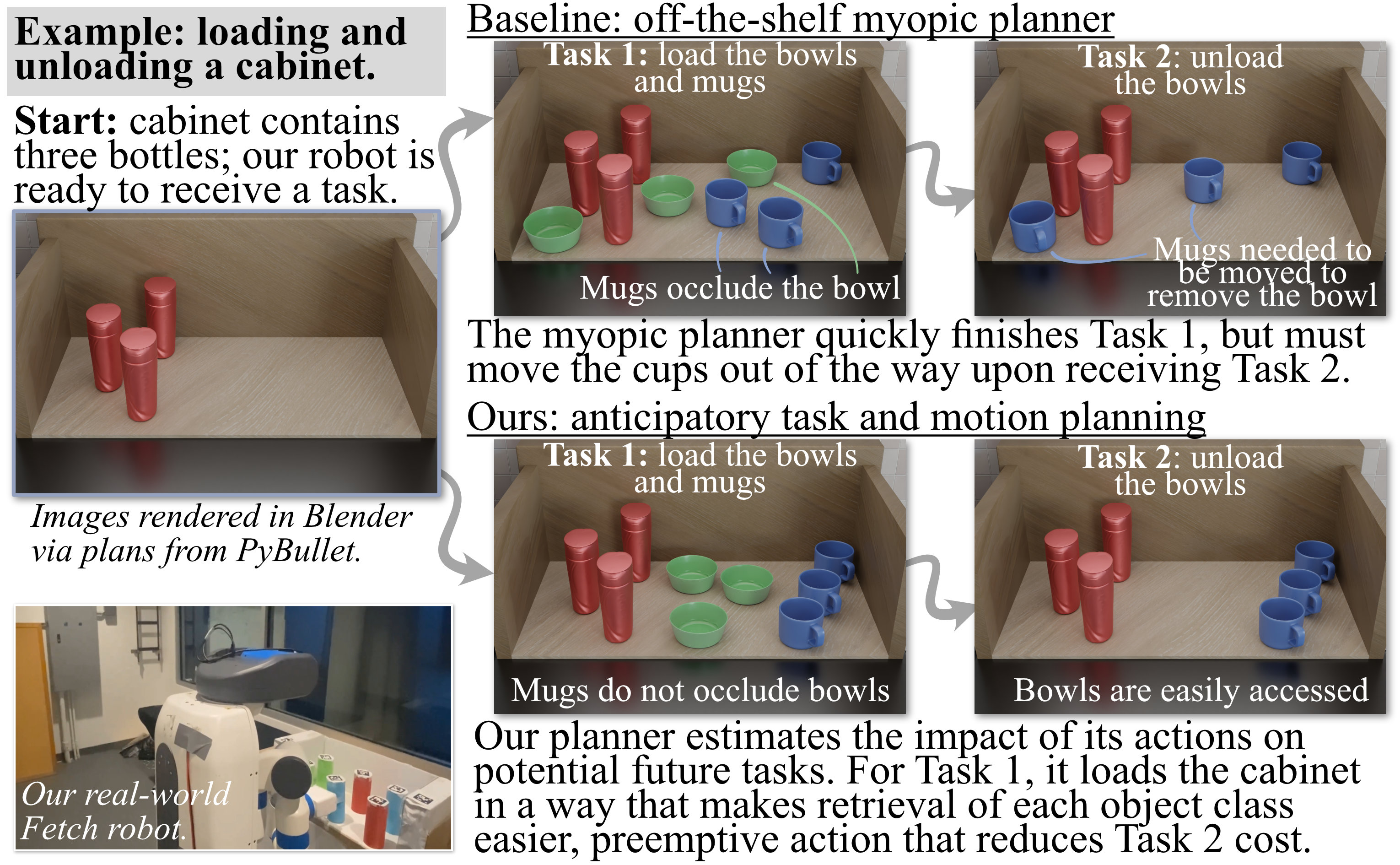

Robots will be expected to complete tasks in environments that persist over time, which means that the robot's actions to complete an immediate task may impact subsequent tasks the robot has not yet been assigned. Most planners myopically aim to quickly complete the immediate task without regard to what the robot may need to do next, owing to both the lack of advance knowledge of subsequent tasks and the would-be computational challenges associated with considering them.

Instead, our anticipatory planning approach uses learning to estimate the expected future cost associated with a particular solution strategy. We use this estimate to find plans that both quickly complete the current objective and preemptively pay down cost on possible future tasks. Our experiments show that this approach yields many forward-thinking behaviors that are difficult to achieve otherwise (organization, tidiness, preparation, and more), opening exciting opportunities for improving robots that will serve as long-lived assistive companions.
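The core idea above (trading immediate cost against an estimate of expected future cost) can be illustrated with a minimal Python sketch. This is a toy under stated assumptions, not our planner: `CandidatePlan`, `select_anticipatory_plan`, the state labels, and the cost numbers are all hypothetical, and the lookup table merely stands in for a learned estimator.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class CandidatePlan:
    """Hypothetical container for one way of completing the current task."""
    name: str
    immediate_cost: float  # cost to complete the task assigned right now
    final_state: str       # label for the state the environment is left in


def select_anticipatory_plan(
    plans: Sequence[CandidatePlan],
    expected_future_cost: Callable[[str], float],
) -> CandidatePlan:
    """Pick the plan minimizing immediate cost plus estimated future cost."""
    return min(
        plans,
        key=lambda p: p.immediate_cost + expected_future_cost(p.final_state),
    )


# Stand-in for a learned estimator (assumption): a fixed table mapping the
# state a plan leaves behind to the expected cost of tasks that may follow.
FUTURE_COST = {
    "cup_left_in_far_cabinet": 9.0,
    "cup_left_on_counter": 2.0,
}

plans = [
    CandidatePlan("fastest", 5.0, "cup_left_in_far_cabinet"),
    CandidatePlan("tidy", 6.0, "cup_left_on_counter"),
]

# A myopic planner looks only at the immediate cost.
myopic = min(plans, key=lambda p: p.immediate_cost)
# The anticipatory choice also accounts for what may come next.
anticipatory = select_anticipatory_plan(plans, FUTURE_COST.__getitem__)
```

In this toy setup the myopic choice is the "fastest" plan (cost 5.0), while the anticipatory choice is the "tidy" plan, whose total of 6.0 + 2.0 = 8.0 beats 5.0 + 9.0 = 14.0.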

Selected Relevant Publications

- Roshan Dhakal, Duc M. Nguyen, Tom Silver, Xuesu Xiao, and Gregory J. Stein. “Anticipatory Task and Motion Planning.” Robotics and Automation Letters (RA-L). 2025. in press. ArXiv link.

- Md Ridwan Hossain Talukder, Raihan Islam Arnob, and Gregory J. Stein. “Anticipatory Planning for Performant Long-Lived Robot in Large-Scale Home-Like Environments.” In: International Conference on Robotics and Automation (ICRA). 2025. ArXiv link.

Introspection & Deployment-Time Improvement Despite Uncertainty

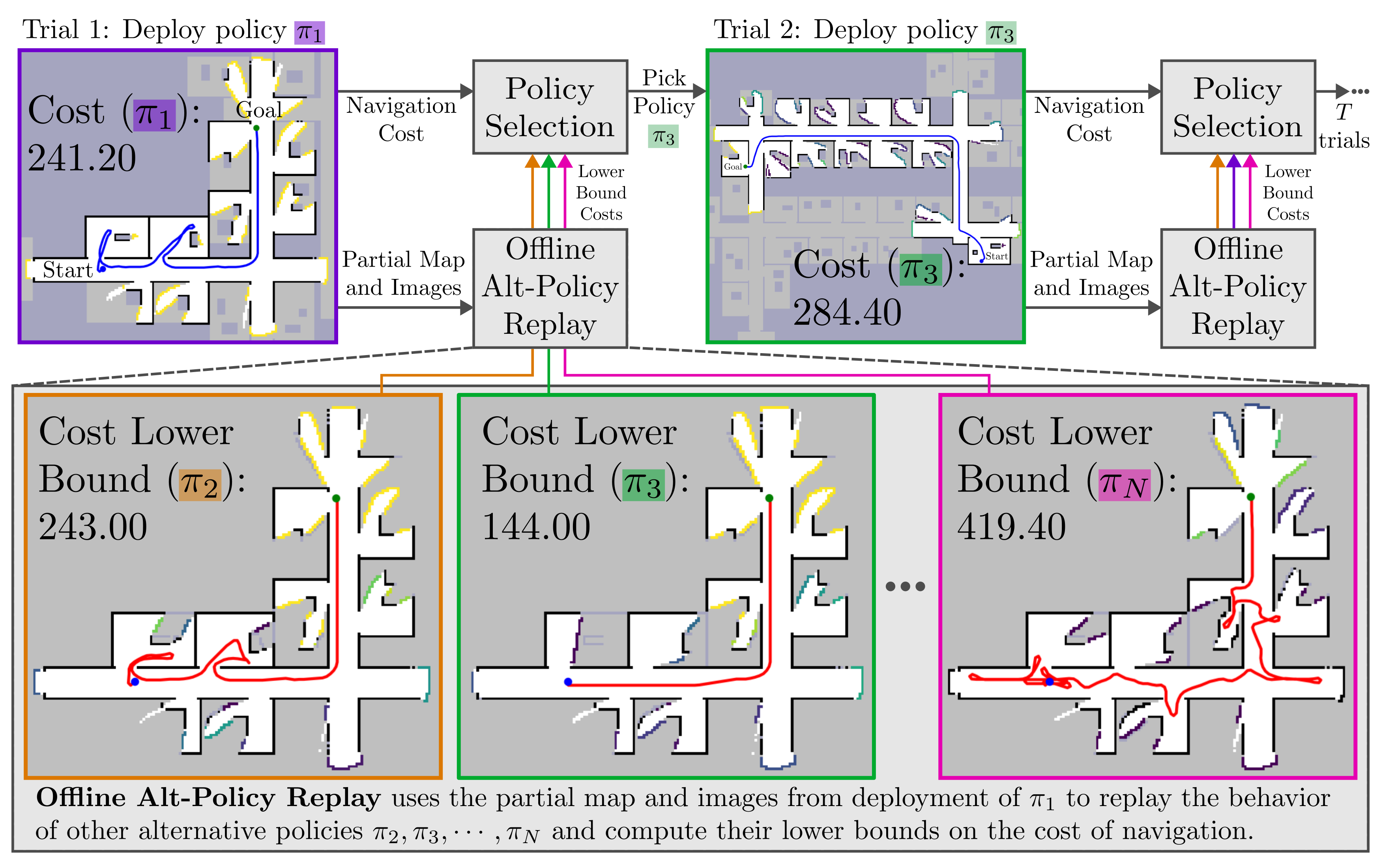

Particularly when acting in uncertain and unknown environments, it is not sufficient to simply deploy robots and expect them to perform well. Robust long-horizon performance requires that robots regularly self-evaluate, introspect, and tune their behavior appropriately based on their experience.

This focus develops tools for deployment-time introspection, enabling robots to quickly and reliably select the best-performing of a set of policies despite pervasive uncertainty. Our work is designed to operate across task planning settings and is flexible enough to support a variety of strategies that make predictions about what the robot does not know, from expert-designed heuristics to learned and language-model-informed predictive models, affording robust and data-efficient adaptation despite uncertainty.
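To give a feel for what selecting among a set of policies at deployment time involves, here is a minimal Python sketch that uses a generic bandit-style rule (a lower confidence bound on observed cost). It is an illustrative stand-in, not the method from our papers: it does not model introspecting alternative policies without deploying them, and `select_policy`, the policy names, and the cost values are all hypothetical.

```python
import math
from typing import Dict, List


def select_policy(costs: Dict[str, List[float]], exploration: float = 1.0) -> str:
    """Return the policy with the lowest optimistic (lower-bound) cost.

    `costs` maps a policy name to the costs observed when it was deployed.
    Policies with no observations are tried first.
    """
    total_trials = sum(len(samples) for samples in costs.values())

    def lower_confidence_bound(name: str) -> float:
        samples = costs[name]
        if not samples:
            return -math.inf  # untried policies are selected before any other
        mean = sum(samples) / len(samples)
        bonus = exploration * math.sqrt(2.0 * math.log(total_trials) / len(samples))
        return mean - bonus

    return min(costs, key=lower_confidence_bound)


# Hypothetical navigation costs recorded over earlier trials (assumption).
history = {
    "expert_heuristic": [120.0, 110.0, 130.0],
    "learned_model": [90.0, 95.0, 85.0],
    "language_model_informed": [],
}

first_choice = select_policy(history)  # the untried policy is explored first

# Suppose that trial came back with a cost of 100.0.
history["language_model_informed"].append(100.0)
second_choice = select_policy(history)
```

With these numbers the first call explores the untried `language_model_informed` policy, and the second call returns `learned_model`, whose mean cost of 90.0 stays lowest even after the exploration bonus is subtracted. A rule like this must deploy every policy at least once, which is exactly the data cost that introspection aims to reduce.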

Aspects of this work are supported by a $500k grant from the

Selected Relevant Publications

- Abhishek Paudel, Xuesu Xiao, and Gregory J. Stein. “Multi-Strategy Deployment-Time Learning and Adaptation for Navigation under Uncertainty.” In: Conference on Robot Learning (CoRL). 2024. paper, video.

- Abhishek Paudel and Gregory J. Stein. “Data-Efficient Policy Selection for Navigation in Partial Maps via Subgoal-Based Abstraction.” In: International Conference on Intelligent Robots and Systems (IROS). 2023. paper, blog post.

Related Blog Posts

- 30 Sep 2023: Data-Efficient Policy Selection for Navigation in Partial Maps [Research]. We present a fast and reliable policy selection approach for navigation in partial maps that leverages information collected during deployment to introspect the behavior of alternative policies without deployment.

Explainable Planning Under Uncertainty

If we are to welcome robots into our homes and trust them to make decisions on their own, they should perform well despite uncertainty and be able to clearly explain both what they are doing and why they are doing it in terms that both expert and non-expert users can understand. As learning (particularly deep learning) is increasingly used to inform decisions, it is both a practical and an ethical imperative that we ensure that data-driven systems are easy to inspect and audit both during development and after deployment. Yet designing an agent that both achieves state-of-the-art performance and can meaningfully explain its actions has so far proven out of reach for existing approaches to long-horizon planning under uncertainty. In our work, we develop new representations for planning that allow us to leverage machine learning to inform good decisions and have sufficient structure so that the agent’s planning process is easily explained.

Relevant Publications

- Gregory J. Stein. “Generating High-Quality Explanations for Navigation in Partially-Revealed Environments.” In: Advances in Neural Information Processing Systems (NeurIPS). 2021. paper, talk (13 min), GitHub, blog post.

- Gregory J. Stein, Christopher Bradley, and Nicholas Roy. “Learning over Subgoals for Efficient Navigation of Structured, Unknown Environments”. In: Conference on Robot Learning (CoRL). 2018. paper, talk (14 min).

Related Blog Posts

- 05 Nov 2021: Generating high-quality explanations for navigation in partially-revealed environments [Research]. We generate explanations of a robot agent’s behavior as it navigates through a partially-revealed environment, expressed in terms of changes to its predictions about what lies in unseen space. Blog post accompanying our NeurIPS 2021 paper.

(Inactive) Mapping for Planning

In our Planning under Uncertainty work, high-level (topological) strategies for navigation meaningfully reduce the space of possible actions available to a robot, allowing use of heuristic priors or learning to enable computationally efficient, intelligent planning. Many existing techniques that aim to build a map of the environment from monocular vision struggle to estimate structure in low-texture or highly cluttered environments, which has precluded their use for topological planning in the past. In our research, we proposed a robust, sparse map representation that we built with monocular vision and that overcomes these shortcomings. Using a learned sensor, we estimated the high-level structure of an environment from streaming images by detecting sparse vertices (e.g., boundaries of walls) and reasoning about the structure between them. We also estimated the known free space in our map, a necessary feature for planning through previously unknown environments. Our mapping technique can also be used with real data and is sufficient for planning and exploration in simulated multi-agent search and Learned Subgoal Planning applications.

Relevant Publications

- Gregory J. Stein, Christopher Bradley, Victoria Preston, and Nicholas Roy. “Enabling Topological Planning with Monocular Vision.” In: International Conference on Robotics and Automation (ICRA). 2020. paper, talk (10 min).