Improving Reliable Navigation under Uncertainty with Non-Local Information Informed Predictions

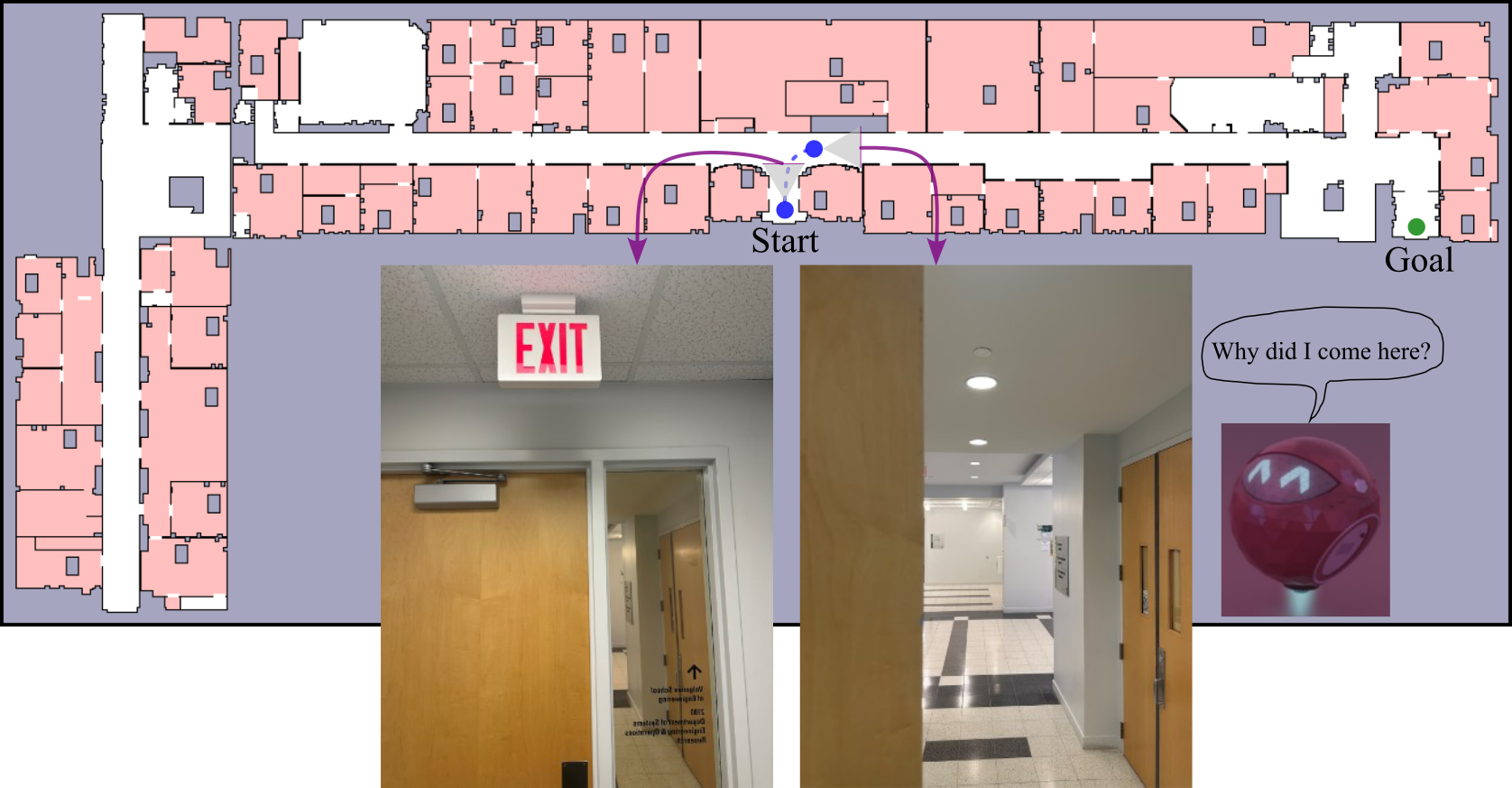

We focus on the task of goal-directed navigation in a partially-mapped environment, in which a robot is expected to reach an unseen goal in minimum expected time. To perform well, a robot must understand how parts of the environment the robot cannot currently see (i.e., non-locally available information) to inform where it should go next, a challenging problem for many existing planning strategies that rely on learning. For example, consider the following:

To navigate efficiently, the robot may need to remember what it has seen and make use of that information to inform its decision-making.

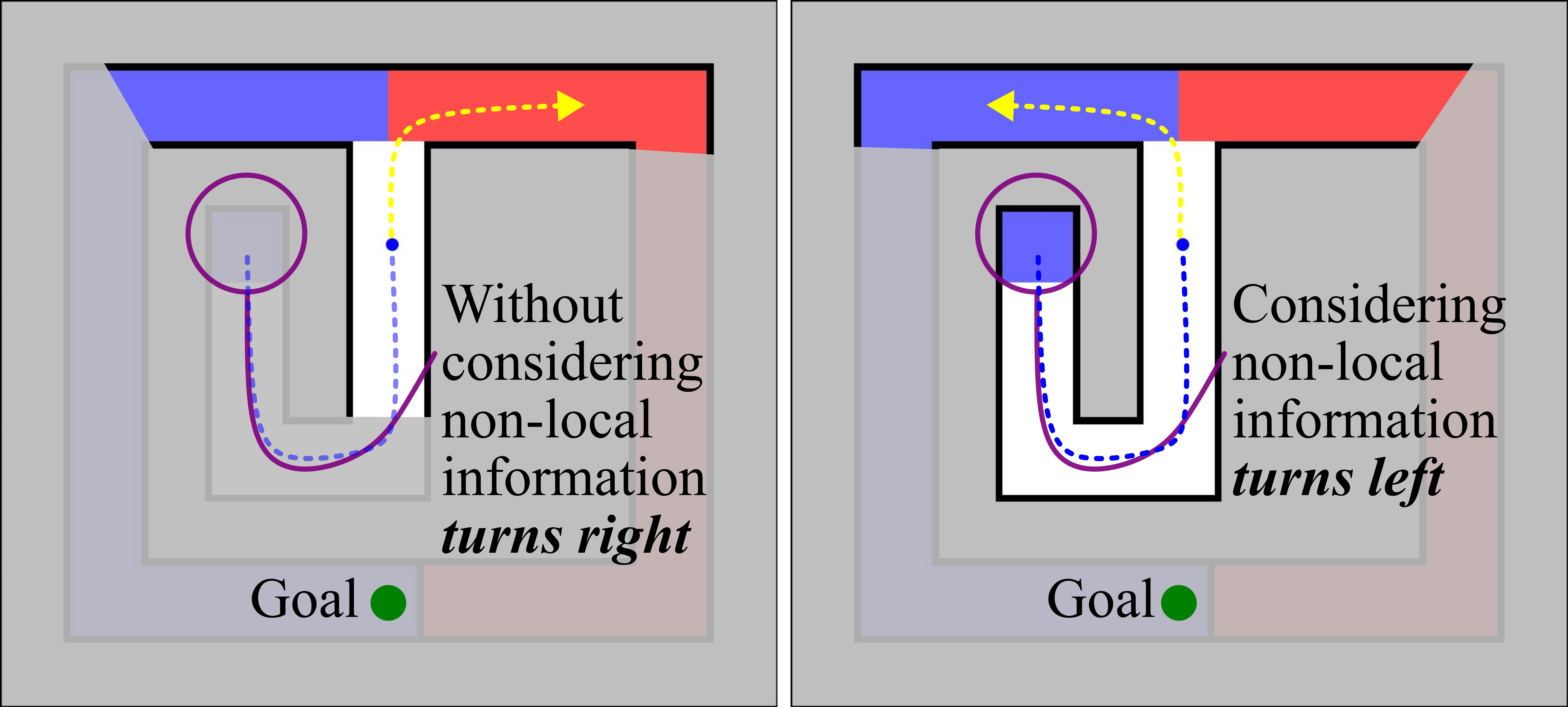

Consider the following scenario, in which the robot starts in a location where it must decide whether to head left or right at an intersection, yet lacks the information it needs to determine which way it should go. If the robot remembers what lies around the corner—a region whose color indicates which hallway it should follow—it can make more effective decisions about where to head and reach the goal more quickly.

The robot cannot effectively decide where to go if it cannot make use of the non-local information from its starting location in the center of the map.

Challenge: reliable navigation under uncertainty that leverages non-local information

To improve long-horizon navigation in partiallly-mapped environments, we want an approach that:

(1)is reliable, guarantee to reach the goal when there exists a solution, and(2)has the capacity to make use of non-local information to make prediction about unseen space to guide navigation

Many learning-driven approaches—including many model-free approaches trained via deep reinforcement learning—have demonstrated the capacity to perform well in this domain. However, in the absence of an explicit map, many of these approaches are unreliable and so lack a guarantee to reach the goal.

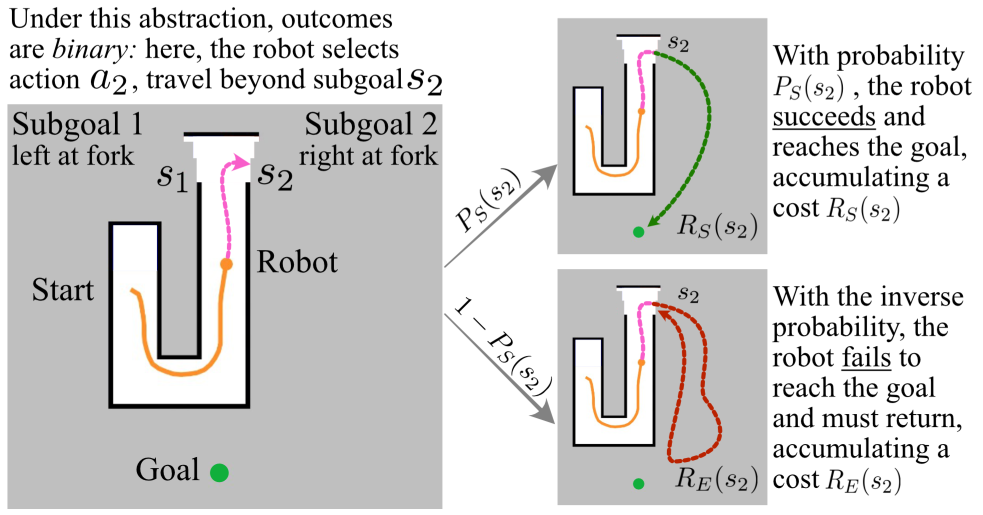

Learning over Subgoals Planning The recent Learning over Subgoals Planning (LSP) approach of Stein et al. (2018) introduces a high-level action abstraction for planning in a partial map where actions represent exploring boundaries beyond known space into unseen space. This abstraction allows for both state-of-the-art performance when planning for long horizon and reliability-by-design. However, LSP is limited: its ability to make predictions about unseen space only makes use of locally observable information, limiting its performance.

Approach

1Use the reliable-by-design model-based LSP planning abstraction2Create a graph representation for non-local information and use a graph neural network (GNN) for learning to inform good behavior

We maintain reliability using LSP action abstraction

LSP uses high-level action abstraction to navigate long-horizon efficiently under uncertainty. This model-based planning abstraction alleviates the computational requirements of POMDP planning. For LSP planning, actions available to the robot have binary outcomes of either reaching the goal and incurring a success cost or failing and accumulating exploration cost.

Under this abstraction, the expected cost of a high-level action is determined via a Bellman Equation:

\[Q(\{m_t, q_t\}, a_t\in \mathcal{A}) = D(m_t, q_t, a_t) + P_S(a_t) R_S(a_t) + (1-P_S(a_t)) \left[R_E(a_t) + \min_{a_{t+1}}Q(\{m_t, q(a_t)\},a_{t+1}) \right]\]where $D$ is the known space travel cost from robot pose $q_t$ with the partial map $m_t$ by taking the action $a_t$, $P_S$ is the likelihood of success for an action, $R_S$ is the expected success cost if goal can be reached, and $R_E$ is the expected exploration cost otherwise.

For more details on how the Learning over Subgoal Planning approach works, please see our blog post.

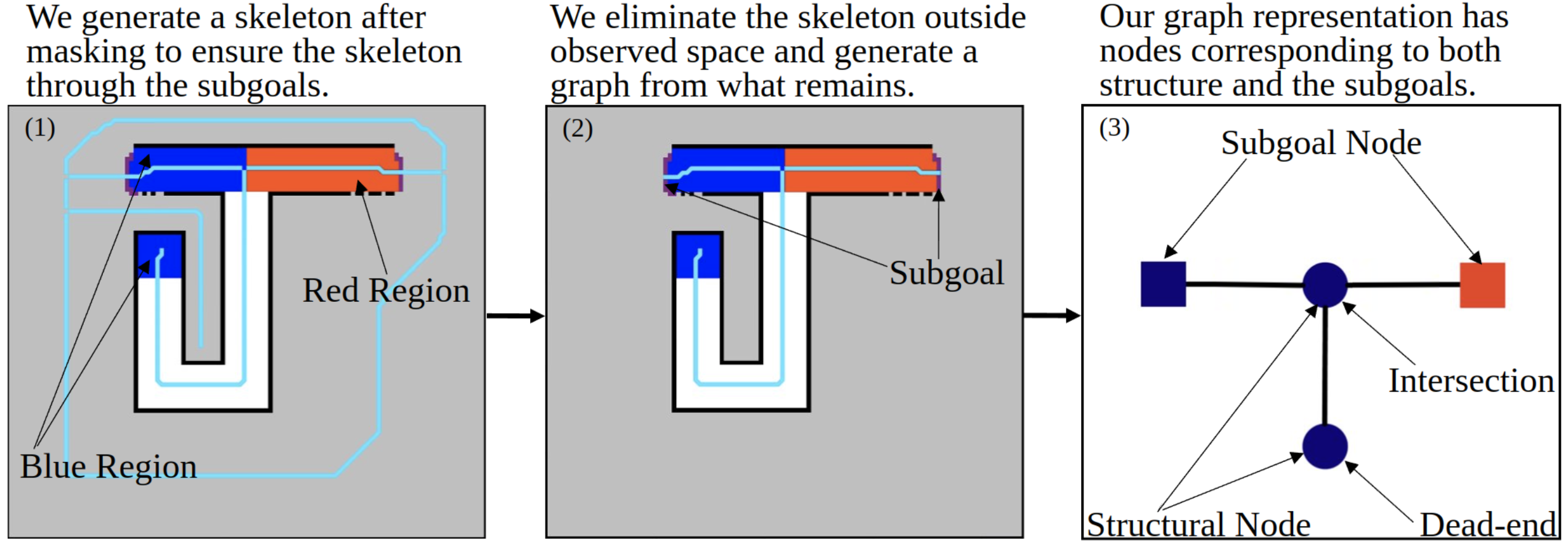

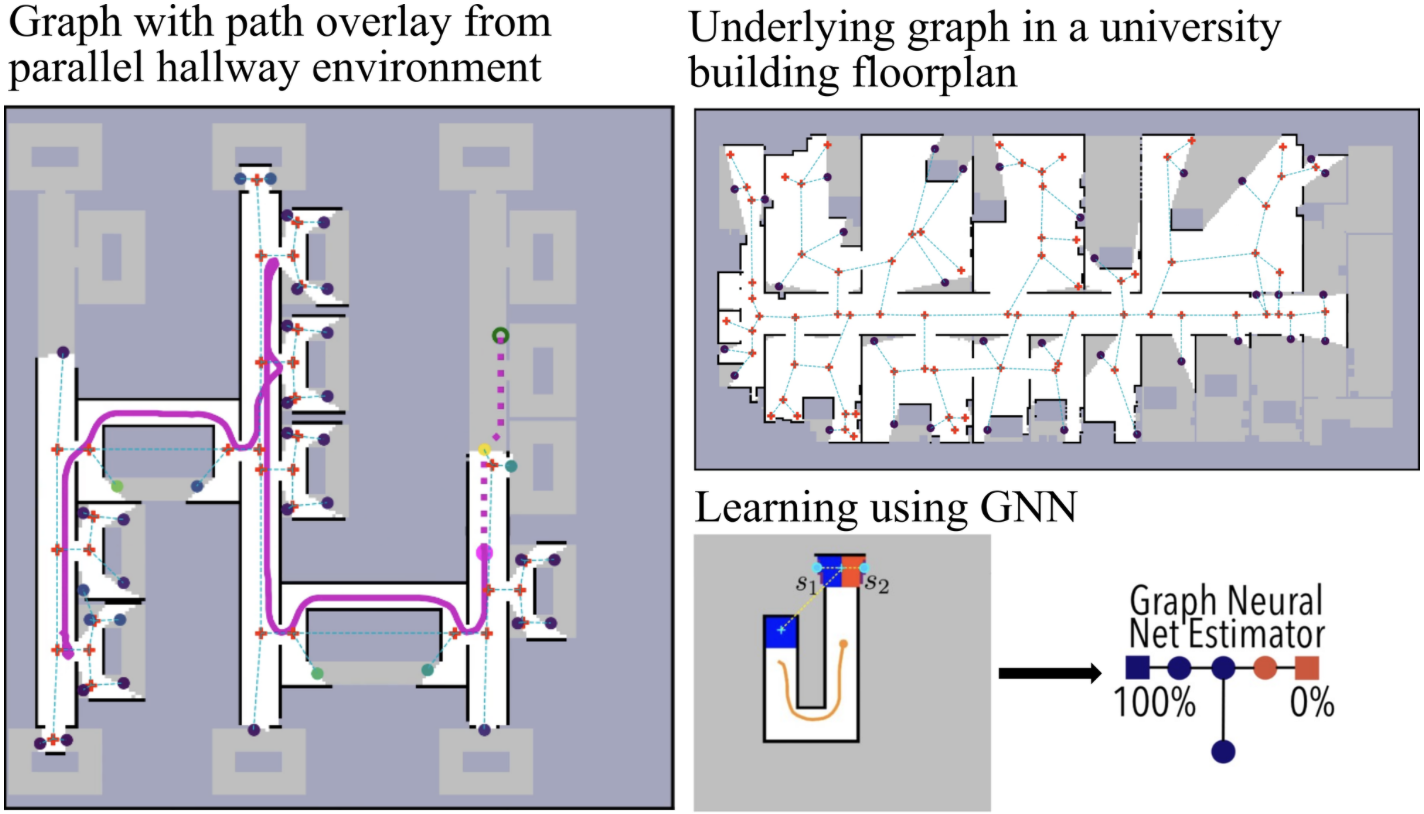

We compute a graph representation of the map for learning via a graph neural network (GNN)

To learn from non-local information, we generate a simplified (few-node) graph of the environment using a skeletonization process that preserves the high-level topological structure, where nodes exist at (i) intersections, (ii) dead-ends, and (iii) subgoals. Then we feed the graph into a Graph Neural Network (GNN) to estimate the subgoal properties.

Here, we show a short video of the robot navigating through the environment alongside a graph representation of the environment:

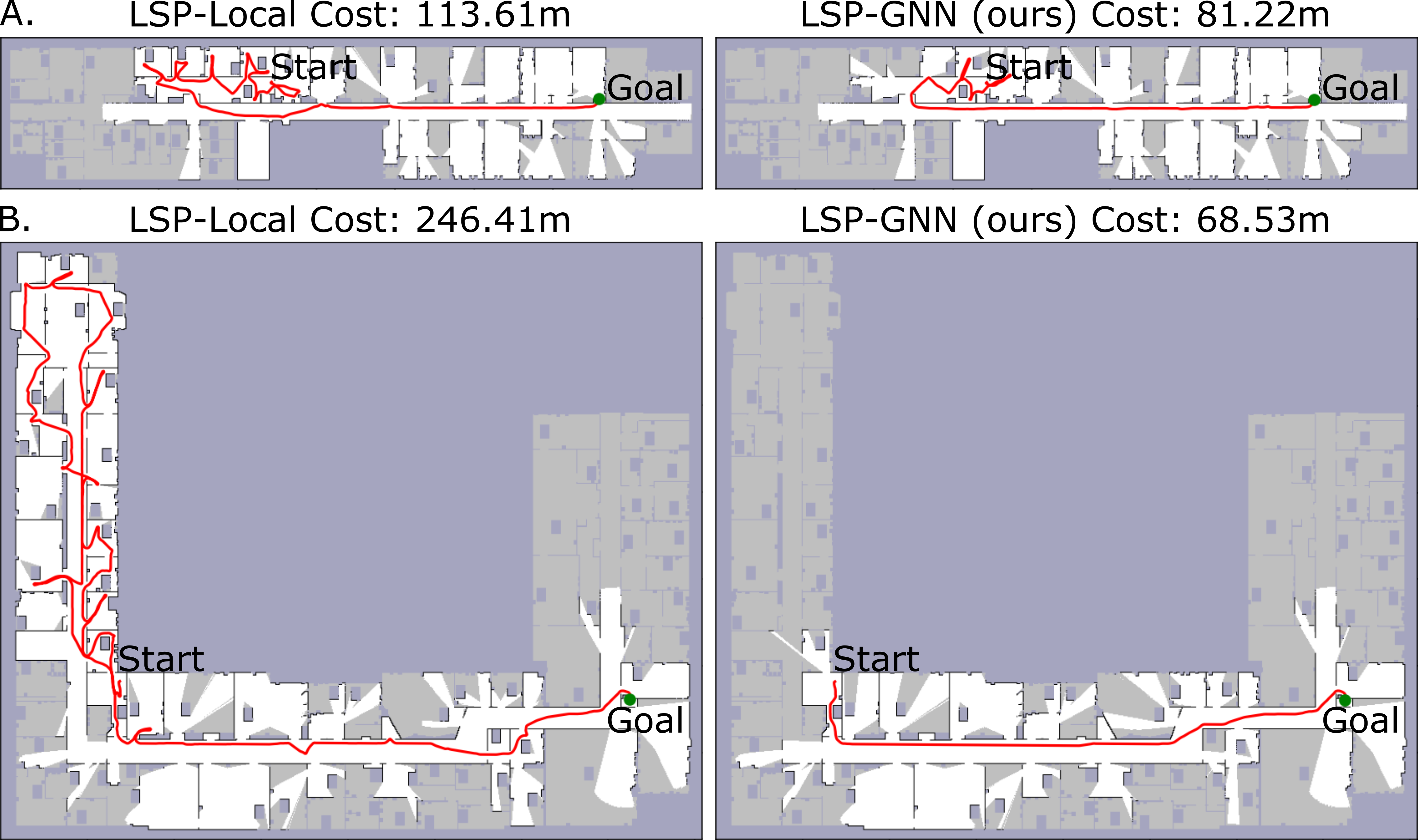

Result: our approach reaches the goal quickly

Our approach learns how a dead-end room looks like and uses this non-local information to improve its prediction avoiding it later on to quickly reach the goal.

We include results on a simulated robot in both procedurally-generated hallway-like environments and also in environments generated from occupancy maps of floorplans around MIT’s campus. In all environments, our LSP-GNN approach outperforms both learned and non-learned baselines.

See our paper for additional results.

Conclusion & References

We present a reliable model-based planning approach that uses a graph neural network to estimate the goodness of goal- directed high-level actions from both local and non-local information, improving navigation under uncertainty. Our planning approach takes advantage of non-local information to improve predictions about unseen space and quickly reach the unseen goal.

In future work, we envision passing more complex sensory input to the robot, allowing it to estimate the goodness of its actions using information collected from image sensors or semantically-segmented images.

Read our full paper or feel free to explore our code on GitHub.

References

- Gregory J. Stein, Christopher Bradley, and Nicholas Roy. “Learning over Subgoals for Efficient Navigation of Structured, Unknown Environments”. In: Conference on Robot Learning (CoRL). 2018. paper, talk (14 min).

- Raihan Islam Arnob and Gregory J. Stein. “Improving Reliable Navigation under Uncertainty via Predictions Informed by Non-Local Information.” In: International Conference on Intelligent Robots and Systems (IROS). 2023. paper.